Does Convergence Matter? YES!!!!

An unconverged solution, even if optimal, is unlikely to be feasible in the real world. In operations research, when an iterative optimisation method such as Successive Linear Programming (SLP) is said to converge, what is meant is that the algorithm has reached a stopping point. If you apply the rules for making the next iterate, the resulting approximation, or at least the answer it gives, will stay the same. And so you can stop.

When running a model to generate a refinery plan, however, we are interested in more than just stopping and looking at the overall value. We have a more practical concern with the details of the result. For us, convergence matters as a signal that we have found a solution that could be applied to running a plant. Many of the recursion passes will give solutions that are optimal for that pass's linear approximation, but a solution is only feasible for the actual non-linear problem if it is converged. An unconverged solution contains some inconsistency that would prevent a plan based on it from actually working. We need solutions that are both internally and externally consistent.

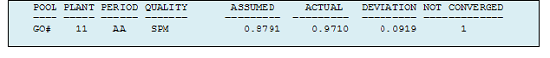

Internal consistency means that related values within the matrix and solution should match. This is primarily relevant to the distributed-recursion formulation* that handles pool qualities. The quality values attributed to blended pools and contributed by them to products must equal those brought in by their components or the unit operations which made them. Here is a line from the pool property log (extracted from the LST file) for an unconverged solution.

The ASSUMED value was put in the matrix for any equations where this sulphur quality value was needed. It would have been used, for example, in the specification equation for the products that this gas oil pool blends into. The ACTUAL value has come from the optimal solution to the current approximation and reflects how the pool has been made. The two numbers don't agree, so the product blending is being done with less sulphur than is actually there. A product might even appear to be on spec when it is actually off spec.
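To see how a product can look on spec while really being off spec, here is a minimal Python sketch. It assumes simple volume-weighted (linear) sulphur blending, and every number in it (the spec, the volumes, the sulphur values) is invented for illustration rather than taken from the log above.

```python
# Illustrative numbers only: none of these values come from the pool log.
def blend_quality(volumes, qualities):
    """Volume-weighted average quality of a blend (linear blending assumed)."""
    total = sum(volumes)
    return sum(v * q for v, q in zip(volumes, qualities)) / total

SPEC_MAX_SULPHUR = 0.50  # hypothetical product spec, wt%

volumes = [40.0, 60.0]              # gas oil pool, low-sulphur component
other_sulphur = 0.30                # low-sulphur component, wt%
go_assumed, go_actual = 0.75, 0.95  # gas oil pool sulphur, wt%

# The LP checks the spec using the ASSUMED pool sulphur...
seen_by_lp = blend_quality(volumes, [go_assumed, other_sulphur])
# ...but the physical blend contains the ACTUAL pool sulphur.
real = blend_quality(volumes, [go_actual, other_sulphur])

print(f"LP sees {seen_by_lp:.2f} wt%, on spec: {seen_by_lp <= SPEC_MAX_SULPHUR}")
print(f"Real blend {real:.2f} wt%, on spec: {real <= SPEC_MAX_SULPHUR}")
```

The blend passes the spec check inside the matrix (0.48 wt%) but would fail it in the tank (0.56 wt%).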

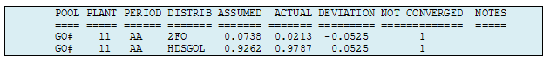

With distributed recursion, information about the error in the quality assumption can reach the destinations via the distribution factors, which provides some correction to the assumed value. Below is the distribution log for this same solution. The ASSUMED distribution factors tell us that 7.38% of the missing sulphur due to the error was blended into the 2FO product and the rest passed into the HDS process unit feed quality balance.

But the ACTUAL column indicates that only 2.13% of GO# was blended into 2FO, so 2FO is receiving too much of the sulphur, while the HDS unit has run as if the sulphur were lower than it actually is. Clearly, this is nonsense: the gas oil has the same sulphur wherever it is used. If you took the instructions for making GO# from this solution, then blended and processed it in the amounts indicated, it would not work out as planned, because this solution relies on the sulphur being different in the two places where it is used.
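The arithmetic behind "too much sulphur in 2FO, too little in HDS" can be sketched in a few lines of Python. This is a simplified model, not the GRTMPS matrix formulation: it assumes the pool's total quality error (error × pool volume) is shared among destinations in proportion to the ASSUMED distribution factors, while each destination actually takes its ACTUAL fraction of the pool. The 7.38% and 2.13% factors come from the text (HDS takes the remainder in each case); the sulphur values and the function name are hypothetical.

```python
def effective_qualities(assumed_q, actual_q, assumed_factors, actual_fractions):
    """Quality each destination effectively 'sees' (simplified model).

    Destination d receives assumed_q * v_d of quality from the ASSUMED value
    plus assumed_factors[d] * error * V of the error, where v_d is its volume
    and V the pool volume. Dividing by v_d = actual_fractions[d] * V gives
    assumed_q + error * assumed_factors[d] / actual_fractions[d].
    """
    error = actual_q - assumed_q
    return {
        dest: assumed_q + error * assumed_factors[dest] / actual_fractions[dest]
        for dest in assumed_factors
    }

assumed_factors = {"2FO": 0.0738, "HDS": 0.9262}   # ASSUMED distribution
actual_fractions = {"2FO": 0.0213, "HDS": 0.9787}  # ACTUAL disposition of GO#
assumed_s, actual_s = 0.75, 0.95                   # GO# sulphur, wt% (invented)

seen = effective_qualities(assumed_s, actual_s, assumed_factors, actual_fractions)
for dest, q in seen.items():
    print(f"{dest}: handled as if sulphur were {q:.3f} wt% (actual {actual_s})")

# If the factors matched how the pool was really used, every destination
# would see the true sulphur, which is what a converged solution requires.
consistent = effective_qualities(assumed_s, actual_s, actual_fractions, actual_fractions)
```

With these inputs 2FO is blended as if the gas oil held about 1.44 wt% sulphur and HDS is run as if it held about 0.94 wt%, while the real value is 0.95 wt% in both places.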

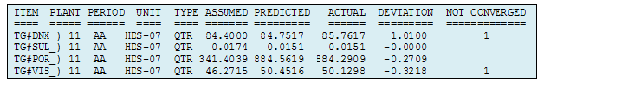

External consistency is a concern for the Adherent Recursion feature of GRTMPS, where non-linear simulators are used to generate dynamic delta-base models of process units and complex blending relationships. We need a good match between the linearized predictions of process unit outputs and the process simulator calculations at the operating conditions and feed qualities selected by the optimization. Here is an extract from the Process Unit Non-Linear Calculation Log in the LST file for an unconverged solution. The ASSUMED value for each quality was determined by running the simulator for the HDS unit before generating the matrix.

The PREDICTED value is the result of the optimization. It reflects how the ASSUMED value changes when the inputs to the calculation (such as the feed qualities) change, using a slope that was also calculated via the simulator before generating the matrix. The ACTUAL value is what the simulator returns after the optimization has completed, using the input values from the solution that has just been found. If the PREDICTED and ACTUAL values don't match well, then the LP and the simulator are inconsistent. If the simulator is a good predictor of actual unit behaviour, then the LP is also inconsistent with reality, and the plan won't work.
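The ASSUMED / PREDICTED / ACTUAL relationship can be sketched in Python. The "simulator" below is a made-up non-linear function standing in for a rigorous HDS model, and the slope is taken by a central finite difference; both are assumptions for illustration, not how GRTMPS or any particular simulator computes its delta-base vectors.

```python
def hds_simulator(feed_sulphur):
    """Hypothetical stand-in for an HDS simulator: product sulphur (wt%)
    as a non-linear function of feed sulphur (wt%). Not a real model."""
    return 0.05 * feed_sulphur ** 1.8

def delta_base(simulator, base_input, step=1e-4):
    """ASSUMED value at the base point plus a central-difference slope."""
    assumed = simulator(base_input)
    slope = (simulator(base_input + step) - simulator(base_input - step)) / (2 * step)
    return assumed, slope

base_feed = 1.0
assumed, slope = delta_base(hds_simulator, base_feed)  # built before the matrix

solution_feed = 1.5  # feed sulphur chosen by the (hypothetical) optimization
predicted = assumed + slope * (solution_feed - base_feed)  # what the LP believes
actual = hds_simulator(solution_feed)                      # simulator re-run afterwards

rel_gap = abs(predicted - actual) / max(abs(actual), 1e-12)
print(f"ASSUMED={assumed:.4f} PREDICTED={predicted:.4f} "
      f"ACTUAL={actual:.4f} gap={rel_gap:.1%}")
```

Because the optimization moved the feed a long way from the base point, the linear prediction (about 0.095 wt%) falls roughly 8% short of the simulator's answer (about 0.104 wt%), so this pass would be rejected.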

The convergence tests used in GRTMPS are designed to ensure that the solution is both internally and externally consistent before it is accepted. If it is not, GRTMPS will try again. Note that the number of passes it takes to find a converged solution is not an indicator of the quality of the solution, although it is, of course, more convenient to solve models quickly.
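The "accept only when consistent, otherwise try again" loop can be illustrated with a toy SLP in Python. The problem (maximise x subject to x² ≤ 2), the function name `slp_max_x` and the tolerance are all hypothetical. A real recursion solves a full LP on each pass, whereas this one-variable LP has a closed-form optimum.

```python
def slp_max_x(g, dg, spec, x0, tol=1e-3, max_passes=50):
    """Toy Successive Linear Programming loop.

    Problem: maximise x subject to the non-linear constraint g(x) <= spec.
    Each pass linearises g at the current point xk; the one-variable LP
    (maximise x s.t. g(xk) + dg(xk) * (x - xk) <= spec, with dg(xk) > 0)
    has its optimum where the linear constraint is tight. The pass is
    accepted only when the PREDICTED (linear) and ACTUAL (non-linear)
    values agree within tol.
    """
    x = x0
    for n in range(1, max_passes + 1):
        xk = x
        x = xk + (spec - g(xk)) / dg(xk)       # optimum of this pass's LP
        predicted = g(xk) + dg(xk) * (x - xk)  # equals spec by construction
        actual = g(x)
        print(f"pass {n}: x={x:.6f} predicted={predicted:.6f} actual={actual:.6f}")
        if abs(actual - predicted) <= tol:
            return x, n
    raise RuntimeError("not converged within max_passes")

x, passes = slp_max_x(g=lambda v: v * v, dg=lambda v: 2 * v, spec=2.0, x0=1.0)
```

On the first pass the LP picks x = 1.5, which is optimal for its linear approximation but gives x² = 2.25, breaking the real limit of 2. Only the third pass agrees with the non-linear function closely enough to be accepted.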

What If the Objective Value isn’t Changing?

Sometimes an optimization will carry on for many passes without any apparent change in value. From a mathematical point of view, this might be taken as evidence of convergence. From a practical point of view, however, we need to pass the consistency tests in order to have a workable plan. The iterations may simply be moving between different unacceptable solutions that happen to generate the same value. For that reason, I never use the objective function tolerance test.
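A small Python sketch shows how the two kinds of stopping rule can disagree. The pass history is invented for illustration (it is not from a GRTMPS run), and both test functions are hypothetical simplifications of an objective-tolerance test and a consistency test.

```python
# Hypothetical pass history: objective value and the largest relative
# ASSUMED/ACTUAL mismatch found by the consistency checks on each pass.
history = [
    {"objective": 1520.40, "max_mismatch": 0.180},
    {"objective": 1521.10, "max_mismatch": 0.060},
    {"objective": 1521.10, "max_mismatch": 0.045},
    {"objective": 1521.10, "max_mismatch": 0.052},
]

def objective_test(history, tol=1e-3):
    """Stop when the objective barely changes between the last two passes."""
    prev, last = history[-2]["objective"], history[-1]["objective"]
    return abs(last - prev) <= tol * max(abs(prev), 1.0)

def consistency_test(history, tol=1e-3):
    """Stop only when the latest pass is internally/externally consistent."""
    return history[-1]["max_mismatch"] <= tol

print("objective test says stop:", objective_test(history))      # True
print("consistency test says stop:", consistency_test(history))  # False
```

The objective has not moved for three passes, yet the mismatch is still far above tolerance: the iterations are wandering between inconsistent solutions with the same value.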

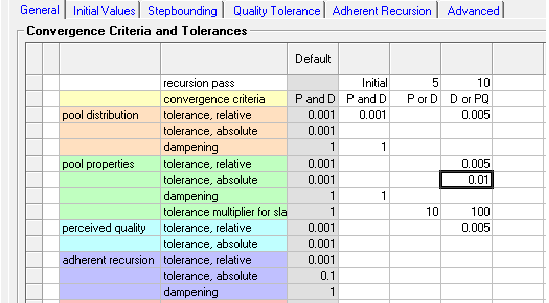

It is possible, however, that a stable value indicates there is a good enough solution there, which you are simply not accepting because your criteria are too tight. If you are not using the “D or PQ” convergence criteria (RCMINOR -1), you should try that, as it is more likely than the other settings to identify a usable level of internal consistency in the pool qualities. (I am planning some further articles on these convergence test options.) The default tolerance values (0.001) are designed to be tight, so that we don't mistakenly accept a poor solution. Relaxing them a bit (to 0.05 or less, say) is probably reasonable for most properties. For some qualities that are hard to measure, like flash point, you might even allow an absolute difference of 2 or 3 degrees. The Slack Tolerance multiplier (RCSLACK) is a good option, as it relaxes the tolerances selectively on the pool qualities which don't have any economic pressure. Just relaxing the tolerances a lot (to 100, for example) until the solution reports as converged is cheating, and might leave you with a visibly inconsistent report. (Abraham Lincoln is said to have once been asked how many legs a sheep has if you call the tail a leg. “Four,” he replied. “Calling the tail a leg doesn't make it one.”)
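Here is a minimal Python sketch of the idea of relaxing tolerances selectively. The helper `within_tolerance` is hypothetical, and it assumes a relative-difference test in which the tolerance is multiplied by a slack factor only when no specification on that quality is binding. This is loosely in the spirit of RCSLACK, but the actual GRTMPS rule may differ.

```python
def within_tolerance(assumed, actual, tol=0.001, spec_binding=True, slack_multiplier=1.0):
    """Relative ASSUMED-vs-ACTUAL test (hypothetical helper).

    Assumption: qualities with no binding spec (no economic pressure) get
    their tolerance widened by slack_multiplier; binding ones keep tol.
    """
    effective_tol = tol if spec_binding else tol * slack_multiplier
    rel_diff = abs(actual - assumed) / max(abs(actual), 1e-12)
    return rel_diff <= effective_tol

print(within_tolerance(0.095, 0.096))            # default 0.001: rejected
print(within_tolerance(0.095, 0.096, tol=0.05))  # modest relaxation: accepted
print(within_tolerance(0.095, 0.096, spec_binding=False, slack_multiplier=20))
print(within_tolerance(0.095, 0.096, spec_binding=True, slack_multiplier=20))

# Calling the tail a leg: a tolerance of 100 accepts a tenfold mismatch.
print(within_tolerance(0.5, 5.0, tol=100))
```

The slack multiplier only helps the quality that nothing is pushing against; a binding quality with the same mismatch is still rejected.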

This is typical of what I set up for convergence testing for our demo models. Full refinery models are also likely to have property-specific tolerances on things that are hard to predict or measure, like flash point, and special values for properties whose magnitudes are atypical (like sulphur in ppm).
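A property-specific tolerance table might be sketched in Python as follows. The property names, the choice of absolute versus relative tests, and every tolerance value are hypothetical, chosen only to mirror the cases mentioned above (a loose absolute band for flash point, a special value for sulphur in ppm). They are not GRTMPS settings.

```python
# Hypothetical tolerance table: (kind, value) per property.
TOLERANCES = {
    "default": ("rel", 0.05),
    "flash_point": ("abs", 2.0),  # degrees; hard to predict/measure
    "sulphur_ppm": ("abs", 1.0),  # ppm; atypical magnitude
}

def property_converged(prop, assumed, actual, table=TOLERANCES):
    """Apply the property's own tolerance, falling back to the default."""
    kind, tol = table.get(prop, table["default"])
    diff = abs(actual - assumed)
    if kind == "abs":
        return diff <= tol
    return diff <= tol * max(abs(actual), 1e-12)

def failing_properties(pool_log, table=TOLERANCES):
    """Names of properties whose ASSUMED/ACTUAL pair fails its tolerance."""
    return [p for p, (assumed, actual) in pool_log.items()
            if not property_converged(p, assumed, actual, table)]

pool_log = {  # invented ASSUMED/ACTUAL pairs for one pool
    "density": (0.845, 0.846),
    "flash_point": (62.0, 63.5),
    "sulphur_ppm": (8.0, 9.5),
}
print(failing_properties(pool_log))  # ['sulphur_ppm']
```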

(*) Not familiar with Distributed Recursion? Have a look at Article 4: How Distributed Recursion Solves the Pooling Problem.

From Kathy's Desk 4th April 2017.
